Unlike traditional flow-based bots, LLM-powered agents have to be able to meaningfully handle infinite different customer inputs AND have a solution space that contains infinite correct and incorrect answers. That variability demands a fundamentally new testing approach.

In our last post, we argued for a diverse set of testing and evaluation methodologies with a pass^k mindset (rerun critical tests multiple times) to avoid false confidence.

This follow-up installment answers the next question teams always ask: when should you use each testing and evaluation method?

The short version: don’t test everything all at once. You’ll move faster (and end up with higher quality) by sequencing methods to match the deployment phase, risk, and frequency of each flow.

Below is a practical guide on hard-won lessons from shipping complex AI agent use cases where iteration velocity initially cratered. The fix wasn’t “more testing.” It was prioritizing the right kind of testing at the right time.

The anti-pattern to avoid

Early on, we felt pressure to get every turn “right.” We (and our customers) over-indexed on brittle, turn-specific assertions and fine-tuning before we had enough test volume to see impact × frequency. We were technically progressing, but operationally creating an unscalable motion. The turning point came with the launch of several highly complex billing and survey use cases where our iteration velocity started to plummet.

We stepped back, looked at the tools we built, and re-sequenced our testing stack accordingly. We were able to run 15x more tests with a far greater degree of diversity, leading to 35% faster release cycles and improving accuracy by 20%. Velocity rebounded, deployment effort dropped significantly, and quality improved because we went deeper on the right types of testing at the right time.

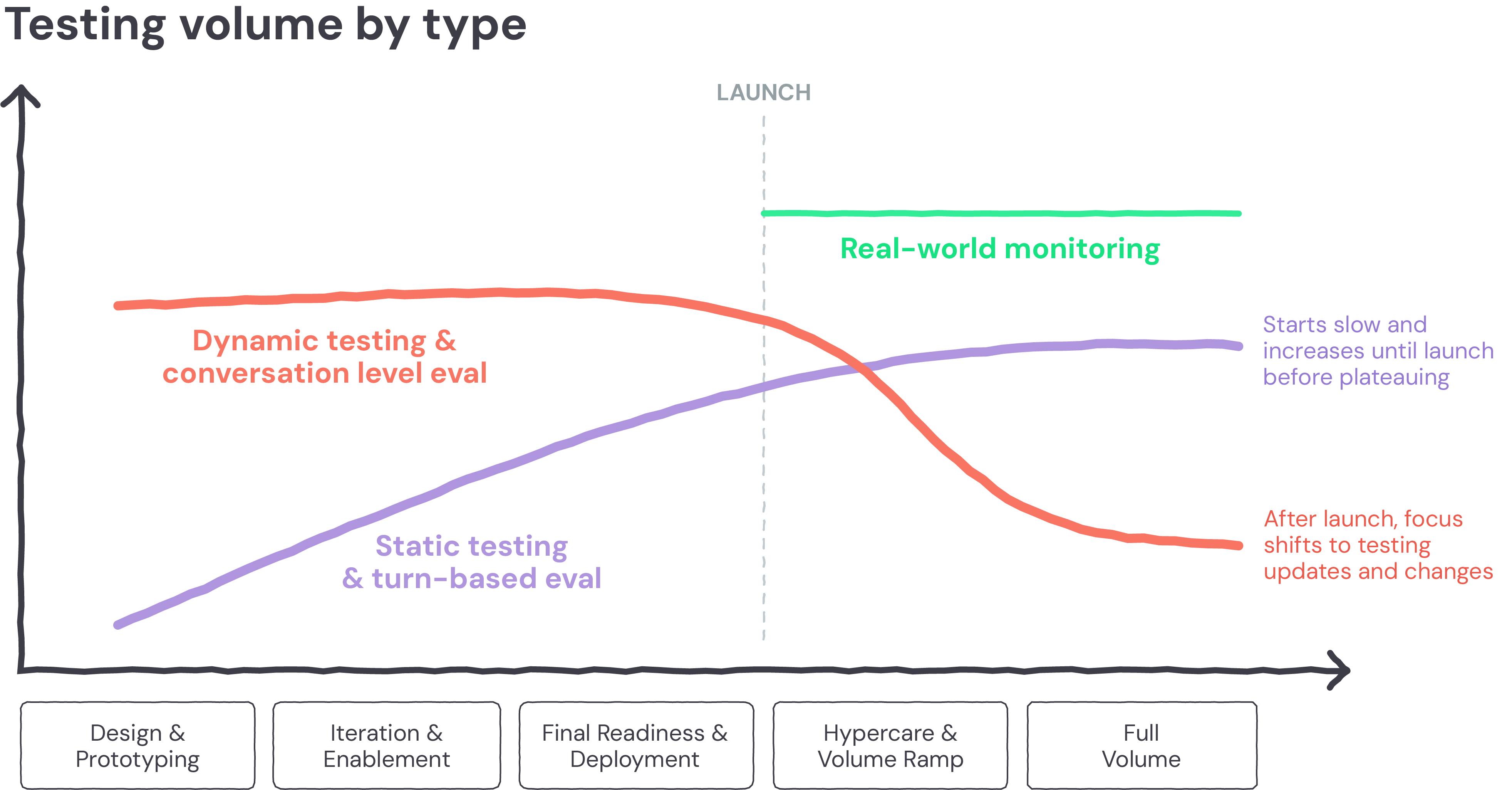

Mapping testing to your deployment motion

Testing and evaluation don’t happen in isolation; they mirror how your AI agent moves from design to launch and beyond. Instead of treating testing as a checklist at the end, think of it as a rhythm that flexes to match each phase of implementation.

The goal isn’t to test everything everywhere, but to apply the right level of rigor and coverage where it matters most.

Testing methods: dynamic vs. static

Testing methods define how you measure and validate your AI agent’s performance, whether by simulating interactions (dynamic) or replaying known cases (static).

Dynamic testing

Dynamic testing simulates customer conversations to measure performance under realistic, varied conditions. Because this can be automated, it’s your main driver for validating whether the AI agent behaves naturally, consistently, and successfully under different phrasing, order, and context scenarios.

When to use: Throughout development, from early design exploration through post-launch monitoring though post launch it may taper and be more critical with major updates.

Best for: End-to-end validation of simulated real-world scenarios and quickly identifying major failures in the agent.

Example: Running a large number of simulated customer conversations to ensure the agent can recognize alternate ways of asking for a refund or password reset and still resolve the issue successfully.

Static testing

Static tests snapshot specific conversation states and replay them against updated versions of the agent to confirm nothing regresses.

When to use: As a supplement to dynamic testing in error cases when you’ve started, slowly ramping over time before stabilizing as the agent is optimized. More heavily used when there is the need for strict turn-by-turn adherence:

- Use it selectively early on, only for issues caught during dynamic testing or key “golden” flows.

- As you near launch, expand coverage for compliance-heavy or risk-sensitive areas like authentication, payments, or disclosures.

- Continue building on test cases as a regression set as you go live and leverage for automated testing.

Best for: Regression prevention, compliance verification, version-over-version comparison

Example: After a customer has provided credit card details, the agent validates that using an API call and tells the customer immediately if it succeeded. To test this, you could have a couple of snapshots using correct and incorrect credit card inputs and always run this scenario as an efficient way to make sure the AI agent can perform its core function at a specific time.

Evaluation scopes: conversation-level vs. turn-level

Evaluation scopes determine how granularly you measure your AI agent’s performance, regardless of which testing method you use.

Conversation- or phase-based evaluation

Conversation- or phase-based evaluation measures whether the AI agent successfully achieved the intended business or customer outcome across multiple turns within an entire flow or interaction section, rather than focusing on individual responses. This can also include adherence to an overall flow.

When to use: Use early and often as a path to quickly understand performance and identify opportunities to improve. This approach is resilient to prompt changes, making it ideal for judging overall quality.

- Establish this as your foundation; it scales naturally as the agent evolves and gives you the clearest picture of business impact.

Best for: Flow correctness, robustness to different test scenarios, and identifying logical or outcome-level gaps.

Example: Evaluating a complete refund flow to not only ensure that the refund was successful, but that it followed the correct trajectory (i.e. steps in the right order), and even handling side quests that the customer may have thrown in along the way.

Turn-based evaluation

Turn-based evaluations check whether the AI agent responds correctly or takes the right actions at specific steps of an interaction.

When to use: Start light as this scope zeroes in on specific conversational turns or transitions, but tie into static testing for the purpose of automating regression testing. It’s brittle when used broadly but essential for deterministic or compliance-bound interactions.

- Ramp up before go-live for critical steps like confirmation wording, disclosure order, or sensitive data handling.

Best for: Sequence validation, compliance assurance, enforcing turn-by-turn constraints

Example: The agent must confirm the customer’s credit card immediately after capture, or read a required disclaimer on the second turn.

Bonus Points - Going Live

After going live, offline testing and evaluation remains important - particularly when updating the agent, but now real world monitoring becomes critical. Leveraging the same sort of conversation or phase based evaluations you’ll want to start immediately assessing whether the agent is successfully achieving it’s goals - and doing so in the right way! The more effective your post launch evaluation is on real conversations, the less dependent you need to be on high volume offline testing and the faster your iteration cycles. On top of that, effective real world monitoring allows you to discover additional edge cases and errors to add to your existing static test sets for optimization and regression testing.

The role of a Cresta customer: transparency and empowerment

Testing and evaluation aren’t just internal quality controls; they’re shared levers for trust. Customers approach this process with different expectations: some want to be deeply involved from day one, while others prefer to own acceptance testing and rely on us to manage the rest. Over time, many move across this spectrum as their teams gain confidence and expertise.

Our philosophy is simple: testing should be fully transparent and accessible. Customers should always be able to see how their AI agent is performing, what tests it’s passing or failing, and even run those same production-grade tests themselves. The more visibility and control we provide, the faster they build trust in both the agent’s quality and the process behind it.